TLDR: As an open-source enthusiast, I'm amazed that we can run something as powerful as Llama 3 locally, and that WE control the data. Let me show you how.

Step 1: Install Docker Desktop on your machine (if you haven’t already)

https://www.docker.com/products/docker-desktop/

Why use Docker? Instead of writing 3 separate articles to handle the nuances of each OS (macOS, Linux, or Windows), we use one source of truth: a container image, built from a Dockerfile, that gives us the same environment on every machine.

^ This is a simplified explanation. I may revisit this when I have a better answer.

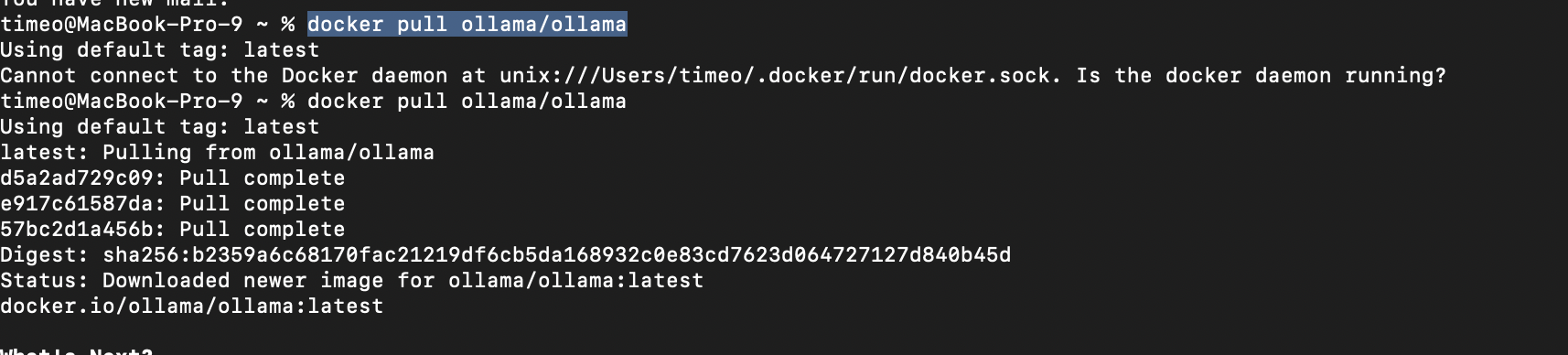

Step 2: In your terminal, paste and run this command to download the Ollama image:

docker pull ollama/ollama

Once the download finishes, you should see something similar to this:

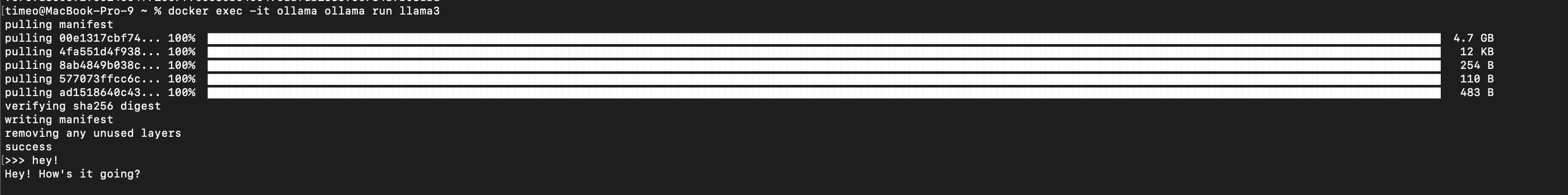

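Step 3: Now that the image is downloaded, start a container from it. This runs Ollama in the background (-d), keeps downloaded models in a named volume called ollama so they survive restarts, and publishes Ollama's API on port 11434: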

docker run -d -v ollama:/root/.ollama -p 11434:11434 --name ollama ollama/ollama

Then download Llama 3 and start chatting with it inside the container (the first run downloads the model, which is several gigabytes):

docker exec -it ollama ollama run llama3
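If you want to confirm that the server is up and see which models it has downloaded, Ollama also exposes an HTTP API on the port published above. Here's a minimal Python sketch using only the standard library. It assumes the container is running on localhost:11434 and calls Ollama's `GET /api/tags` endpoint, which lists local models. The helper names (`parse_model_names`, `list_local_models`) are hypothetical, my own rather than part of Ollama.

```python
import json
import urllib.request

# Assumption: Ollama is reachable through the `-p 11434:11434` mapping above.
OLLAMA_URL = "http://localhost:11434"


def parse_model_names(payload: dict) -> list:
    """Pull the model tags out of the JSON body returned by GET /api/tags."""
    return [model["name"] for model in payload.get("models", [])]


def list_local_models(base_url: str = OLLAMA_URL, timeout: float = 5.0) -> list:
    """Ask the running Ollama server which models it has downloaded."""
    with urllib.request.urlopen(f"{base_url}/api/tags", timeout=timeout) as resp:
        return parse_model_names(json.load(resp))
```

Once the model has been pulled, `list_local_models()` should return a list that includes a `llama3` tag. If the container isn't running, it raises a connection error instead.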

Boom! You can now talk to one of the most capable open LLMs around, OFFLINE (once the model is downloaded), on your own computer. Welcome to the club. Perhaps you'll type something more informative than just 'hey!'
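You can also send those more informative prompts from your own scripts. Ollama's `POST /api/chat` endpoint takes the conversation so far as a list of messages. Below is a minimal sketch that assumes the model tag is `llama3` and turns streaming off so the reply arrives as one JSON object. `build_chat_request` and `chat` are hypothetical helper names.

```python
import json
import urllib.request

OLLAMA_URL = "http://localhost:11434"  # port published by the docker run command
MODEL = "llama3"  # assumption: the tag you pulled with `ollama run llama3`


def build_chat_request(messages, model=MODEL, base_url=OLLAMA_URL):
    """Build a non-streaming POST request for Ollama's /api/chat endpoint."""
    body = json.dumps({"model": model, "messages": messages, "stream": False})
    return urllib.request.Request(
        f"{base_url}/api/chat",
        data=body.encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def chat(history, user_text, timeout=300.0):
    """Send the conversation plus a new user turn and return the model's reply."""
    user_turn = {"role": "user", "content": user_text}
    req = build_chat_request(history + [user_turn])
    with urllib.request.urlopen(req, timeout=timeout) as resp:
        reply = json.load(resp)["message"]  # {"role": "assistant", "content": ...}
    # Record the exchange only after the server answers, so the next turn has context.
    history.extend([user_turn, reply])
    return reply["content"]
```

The API itself doesn't remember earlier turns, which is why `chat` resends the whole `history` each time. For example, start with `history = []`, call `chat(history, "Explain Docker volumes in two sentences.")`, then ask a follow-up with the same `history` list.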

Bonus Points!

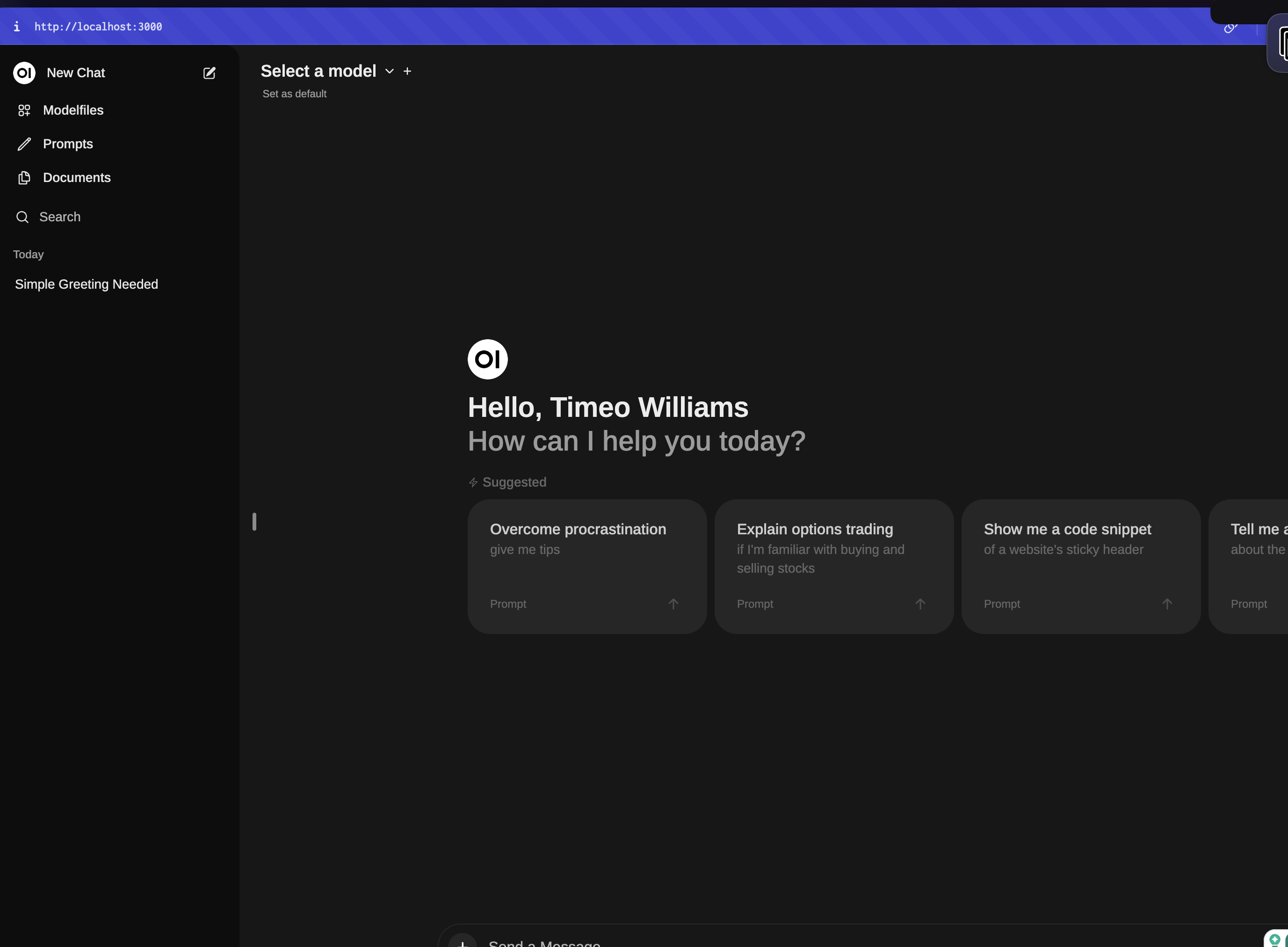

If you've made it this far but are feeling dissatisfied, you probably miss how intuitive it is to chat with ChatGPT through its web interface.

Let’s make something similar.

Shoutout to the contributors of Open WebUI. They've built a beautiful interface so that those of us who aren't terminal lovers can live in peace.

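In the same terminal window or the environment of your choice (perhaps VSCode?), enter the command below. It serves the interface on port 3000, and --add-host lets the container reach the Ollama server running on your machine: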

docker run -d -p 3000:8080 --add-host=host.docker.internal:host-gateway -v open-webui:/app/backend/data --name open-webui --restart always ghcr.io/open-webui/open-webui:main
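Open WebUI can take a minute to start, so here's a small Python sketch that polls its address until it answers. It assumes the `-p 3000:8080` mapping above, which serves the UI at http://localhost:3000. `wait_until_ready` is a hypothetical helper, and it treats any HTTP response, even an error status, as a sign the server is up.

```python
import http.client
import time
import urllib.error
import urllib.request

# Ignore proxy settings from the environment: the target runs on this machine.
_opener = urllib.request.build_opener(urllib.request.ProxyHandler({}))


def wait_until_ready(url="http://localhost:3000", timeout=120.0, interval=2.0):
    """Poll url until it returns any HTTP response, or give up after timeout seconds."""
    deadline = time.monotonic() + timeout
    while True:
        try:
            with _opener.open(url, timeout=interval):
                return True
        except urllib.error.HTTPError:
            # An error status still means the web server is accepting requests.
            return True
        except (OSError, http.client.HTTPException):
            pass  # refused, reset, or timed out: not ready yet
        if time.monotonic() >= deadline:
            return False
        time.sleep(interval)
```

Running `wait_until_ready()` right after the `docker run` command should return `True` once the interface is reachable, or `False` if it hasn't come up within two minutes.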

After a minute or so, open http://localhost:3000 in your browser and you'll be greeted by the Open WebUI interface.

Select your Llama 3 model from the model selector at the top and chat away!