Authors:

(1) Gonzalo J. Aniano Porcile, LinkedIn;

(2) Jack Gindi, LinkedIn;

(3) Shivansh Mundra, LinkedIn;

(4) James R. Verbus, LinkedIn;

(5) Hany Farid, LinkedIn and University of California, Berkeley.

3. Model

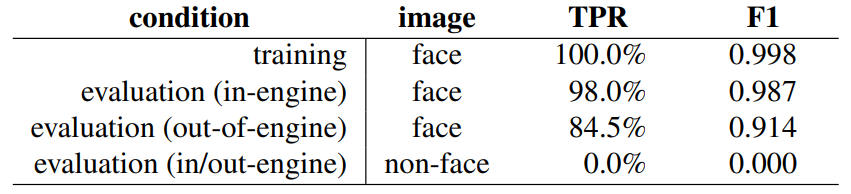

We train a model to distinguish real from AI-generated faces. The underlying model is the EfficientNet-B1[7] convolutional neural network [30]. We found that this architecture performs better than other state-of-the-art architectures (Swin-T [22], ResNet50 [14], XceptionNet [7]). The EfficientNet-B1 network has 7.8 million internal parameters, pre-trained on the ImageNet-1K image dataset [30].

Our pipeline consists of three stages: (1) an image preprocessing stage; (2) an image embedding stage; and (3) a scoring stage. The model takes a color image as input and outputs a numerical score in the range [0, 1]. Scores near 0 indicate that the image is likely real, and scores near 1 indicate that it is likely AI-generated.
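To make the three-stage structure concrete, here is a minimal NumPy sketch. It is not the authors' implementation. The nearest-neighbour resize is one arbitrary choice of resampling. The `embed` function is a hypothetical stand-in for the frozen EfficientNet-B1 backbone that simply averages each color channel. The scoring weights are random. Only the 512×512 input size and the sigmoid mapping to [0, 1] come from the text.

```python
import numpy as np

INPUT_SIZE = 512  # preprocessing resolution stated in the text


def preprocess(image: np.ndarray) -> np.ndarray:
    """Stage 1: resize an H x W x 3 uint8 image to 512 x 512 x 3 floats in [0, 1].

    Nearest-neighbour sampling is an illustrative choice, not the paper's.
    """
    h, w, _ = image.shape
    rows = np.arange(INPUT_SIZE) * h // INPUT_SIZE  # source row for each output row
    cols = np.arange(INPUT_SIZE) * w // INPUT_SIZE  # source column for each output column
    return image[rows][:, cols].astype(np.float32) / 255.0


def embed(image: np.ndarray) -> np.ndarray:
    """Stage 2 (hypothetical stand-in): the real pipeline uses a frozen,
    ImageNet-pretrained EfficientNet-B1; here we just average each channel."""
    return image.mean(axis=(0, 1))


def score(embedding: np.ndarray, weights: np.ndarray, bias: float) -> float:
    """Stage 3: map the embedding to a score in [0, 1] with a sigmoid.

    Near 0 means "likely real"; near 1 means "likely AI-generated".
    """
    logit = float(embedding @ weights + bias)
    return float(0.5 * (1.0 + np.tanh(0.5 * logit)))  # numerically stable sigmoid


def detect(image: np.ndarray, weights: np.ndarray, bias: float) -> float:
    """Run the three stages in order."""
    return score(embed(preprocess(image)), weights, bias)


rng = np.random.default_rng(0)
photo = rng.integers(0, 256, size=(720, 1280, 3), dtype=np.uint8)  # synthetic input
print(preprocess(photo).shape)  # (512, 512, 3)
print(0.0 <= detect(photo, rng.normal(size=3), 0.0) <= 1.0)  # True
```

Because the scoring stage ends in a sigmoid, every input maps to a score strictly inside the interval, regardless of what the embedding stage produces.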

The image preprocessing stage resizes the input image to a resolution of 512×512 pixels. The resized color image is then passed to an EfficientNet-B1 transfer learning layer. In the scoring stage, the output of the transfer learning layer is fed to two fully connected layers (each of size 2,048, with a ReLU activation function), a dropout layer with a dropout probability of 0.8, and a final scoring layer with a sigmoid activation. Only the scoring layers, with 6.8 million trainable parameters, are tuned. The trainable weights are optimized using the AdaGrad algorithm with a minibatch size of 32 and a learning rate of 0.0001, for up to 10,000 training steps. Model training was performed on a cluster of 60 NVIDIA A100 GPUs.
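The 6.8 million figure can be checked by counting the weights and biases of the scoring layers. The sketch below assumes two details the text does not state: the transfer learning layer emits the standard 1,280-dimensional pooled EfficientNet-B1 feature vector, and the single dropout layer sits between the second fully connected layer and the scoring layer. Under these assumptions the head has 1,280×2,048 + 2,048 + 2,048×2,048 + 2,048 + 2,048 + 1 = 6,821,889 trainable parameters, consistent with the reported 6.8 million. The `adagrad_step` function is a textbook AdaGrad update using the stated learning rate of 0.0001. Its epsilon, zero-initialized accumulator, and the He weight initialization are illustrative choices, not the authors' settings.

```python
import numpy as np

EMBED_DIM = 1280       # assumption: pooled EfficientNet-B1 feature size
HIDDEN = 2048          # width of each fully connected layer (from the text)
DROPOUT_P = 0.8        # dropout probability (from the text)
LEARNING_RATE = 1e-4   # AdaGrad learning rate (from the text)
BATCH_SIZE = 32        # minibatch size (from the text)


def init_head(rng: np.random.Generator):
    """Scoring head 1280 -> 2048 -> 2048 -> 1 as (weight, bias) pairs (He init, illustrative)."""
    sizes = [EMBED_DIM, HIDDEN, HIDDEN, 1]
    return [
        (rng.normal(0.0, np.sqrt(2.0 / m), size=(m, n)), np.zeros(n))
        for m, n in zip(sizes[:-1], sizes[1:])
    ]


def count_params(layers) -> int:
    """Total number of trainable weights and biases."""
    return sum(w.size + b.size for w, b in layers)


def forward(layers, x, rng=None, train=False):
    """Map a (batch, 1280) embedding to (batch,) scores in [0, 1]."""
    h = x
    for w, b in layers[:-1]:
        h = np.maximum(h @ w + b, 0.0)  # fully connected layer + ReLU
    if train:  # inverted dropout (assumed placement), active only during training
        keep = 1.0 - DROPOUT_P
        h = h * (rng.random(h.shape) < keep) / keep
    w, b = layers[-1]
    logits = (h @ w + b)[:, 0]
    return 0.5 * (1.0 + np.tanh(0.5 * logits))  # sigmoid, written stably


def adagrad_step(param, grad, accum, lr=LEARNING_RATE, eps=1e-7):
    """One in-place AdaGrad update on NumPy arrays: each weight's step size
    shrinks as its squared gradients accumulate."""
    accum += grad ** 2
    param -= lr * grad / (np.sqrt(accum) + eps)


rng = np.random.default_rng(0)
head = init_head(rng)
print(count_params(head))  # 6821889
scores = forward(head, rng.normal(size=(BATCH_SIZE, EMBED_DIM)), rng, train=True)
print(scores.shape)  # (32,)
```

Since the backbone is frozen, only these head parameters receive AdaGrad updates; the 7.8 million EfficientNet-B1 weights are reused as-is from ImageNet-1K pre-training.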

This paper is available on arXiv under a CC 4.0 license.

[7] We describe an older version of the EfficientNet model, which we previously operationalized on LinkedIn and which has since been replaced with a newer model. We recognize that this model is not the most recent, but we are only now able to report these results because the model is no longer in use.