This paper is available on arXiv under the CC BY-NC-SA 4.0 license.

Authors:

(1) Yejin Bang, Centre for Artificial Intelligence Research (CAiRE), The Hong Kong University of Science and Technology;

(2) Nayeon Lee, Centre for Artificial Intelligence Research (CAiRE), The Hong Kong University of Science and Technology;

(3) Pascale Fung, Centre for Artificial Intelligence Research (CAiRE), The Hong Kong University of Science and Technology.

Table of Links

- Abstract and Intro

- Related Work

- Approach

- Experiments

- Conclusion

- Limitations, Ethics Statement and References

- A. Experimental Details

- B. Generation Results

Abstract

Framing bias plays a significant role in exacerbating political polarization by distorting the perception of actual events. Media outlets with divergent political stances often use polarized language in their reporting of the same event. We propose a new loss function that reduces framing bias by encouraging the model to minimize the polarity difference between polarized input articles. Specifically, our loss is designed to jointly optimize the model to map between the two polarity ends in both directions. Our experimental results demonstrate that incorporating the proposed polarity minimization loss leads to a substantial reduction in framing bias when compared to a BART-based multi-document summarization model. Notably, we find that this approach is most effective when the model is trained to minimize the polarity loss associated with informational framing bias (i.e., skewed selection of information to report).

1. Introduction

Framing bias has become a pervasive problem in modern media, distorting readers' understanding of what actually happened through a skewed selection of information and language (Entman, 2007, 2010; Gentzkow and Shapiro, 2006). Its most notable impact is the amplified polarity between conflicting political parties and media outlets. Mitigating framing bias is therefore critical to promoting accurate and objective delivery of information.

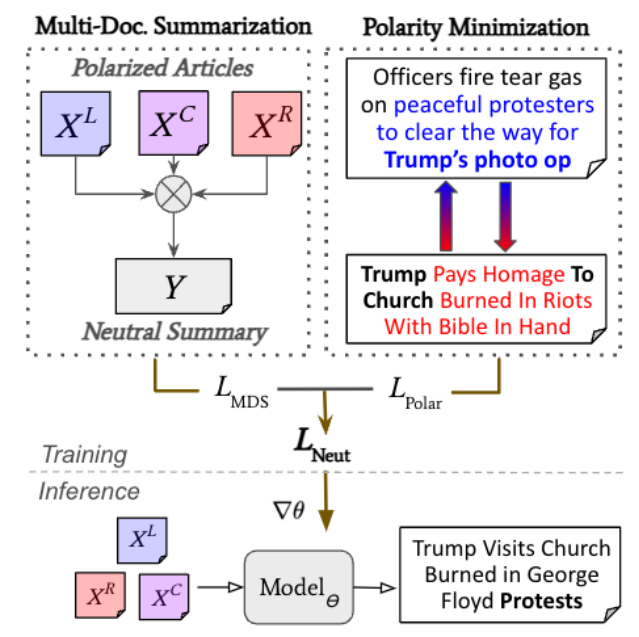

One promising mitigation paradigm is to generate a neutralized version of a news article by synthesizing multiple views from biased source articles (Sides, 2018; Lee et al., 2022). To achieve news neutralization more effectively, we introduce a polarity minimization loss, which provides an inductive bias that encourages the model to prefer generations with a minimized polarity difference. Our proposed loss trains the model to be simultaneously good at mapping articles from one end of the polarity spectrum to the other end and vice versa, as illustrated in Fig. 1. Intuitively, the model is forced to learn and focus on the aspects shared between the contrasting polarities at the two opposite ends.
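To make the bidirectional idea concrete, the following is a minimal sketch and not the authors' implementation. It assumes that each mapping direction is scored with a standard token-level negative log-likelihood and that the polarity term is added to the usual neutral-summary loss through a weight. The function names, the weight `lam`, and the weighted-sum combination are all hypothetical choices made for illustration; the inputs are the probabilities a model assigned to each reference token.

```python
import math
from typing import Sequence


def token_nll(probs: Sequence[float]) -> float:
    """Mean negative log-likelihood of the reference tokens, given the
    probability the model assigned to each of them."""
    return -sum(math.log(p) for p in probs) / len(probs)


def polarity_loss(p_left_to_right: Sequence[float],
                  p_right_to_left: Sequence[float]) -> float:
    """Hypothetical bidirectional term: the model is penalized in both
    directions, for mapping one polarity end to the other and back."""
    return token_nll(p_left_to_right) + token_nll(p_right_to_left)


def total_loss(p_neutral: Sequence[float],
               p_left_to_right: Sequence[float],
               p_right_to_left: Sequence[float],
               lam: float = 1.0) -> float:
    """Assumed combination: neutral-summary loss plus a weighted
    polarity term. `lam` is an illustrative hyperparameter."""
    return token_nll(p_neutral) + lam * polarity_loss(
        p_left_to_right, p_right_to_left
    )


# Toy probabilities, made up purely for illustration.
loss = total_loss(
    p_neutral=[0.9, 0.8, 0.7],
    p_left_to_right=[0.6, 0.5],
    p_right_to_left=[0.5, 0.6],
    lam=0.5,
)
print(f"combined loss: {loss:.4f}")
```

Because the polarity term in this sketch sums both directions, swapping the two polarity ends leaves the loss unchanged, which mirrors the symmetric treatment of the two ends described above.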

In this work, we demonstrate the effectiveness of our proposed loss function by minimizing polarity in two dimensions of framing bias: lexical and informational (Entman, 2002). Lexical polarization results from the choice of words with different valence and arousal to describe the same information (e.g., "protest" vs. "riot"). Informational polarization results from a differing selection of information to cover, often including unnecessary or only loosely related information about the issue being covered. Our investigation suggests that learning from opposite polarities that are distinct in the informational dimension gives the model a better ability to focus on common ground and minimize biases in the polarized input articles. Ultimately, our proposed loss enables the removal of bias-inducing information and the generation of more neutral language.